Here are some applications that you can freely download. They come with no guarantee!

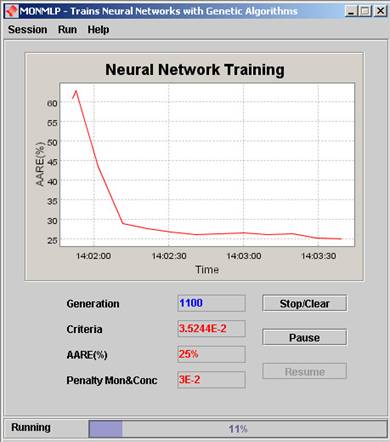

1) Mono-Concave Multilayer Perceptron Builder

(uses genetic algorithms to train neural networks with specified signs for the 1st and 2nd order derivatives of the output with respect to some of its inputs)

MONMLP-guide.pdf (a short user's guide)

The Java version of the algorithm is user friendly and requires Java 1.4. After unzipping the MONMLP.zip file, you can run the application by executing the file monmlp.bat (Windows only). In a Linux environment, you can launch the following command from the directory where the application was unzipped: java -classpath jfreechart-0.9.4.jar:jcommon-0.7.1.jar:.: Interfata

For more details on how this algorithm works, please consult the second chapter of the thesis.

2) Pattern recognition functions tools

The package tarcafun contains several useful functions implemented in MATLAB® that apply to pattern recognition problems. First download tarcafun.zip and unzip it. Set your MATLAB path to point to the directory where you unzipped the archive. You may now use the following functions:

| Function Name | Summary Description | Help |
|---|---|---|
| featureSelector | A feature selection algorithm for CLASSIFICATION and REGRESSION. The relevance criterion is J=alpha*AR+gama*ADC, where the Accuracy Rate (AR) is obtained by cross-validation with a k-nearest neighbor classifier (CLASSIFICATION only) and ADC is the Asymmetric Dependency Coefficient from the information-theoretic framework. The combinatorial optimization method is "plus l take away r". This function indicates which columns of a matrix X are best suited to map y by ranking them in decreasing order of importance. | |
| featureSelectorInit | This function is almost identical to featureSelector (see help for details), except that it may start with an initial guess of the relevant features. | |
| itss_ADC | Computes an Asymmetric Dependency Coefficient (information theory) between a feature variable X and a response variable y. | |
| lau_confmatrix | Returns the confusion matrix for a classifier. | |
| lau_knn | Implements a k-nearest neighbor algorithm. This is a simple classification rule that assigns a new point x to the class containing most of the k nearest neighbors of x in the training data set. | |
| lau_knn_crosserr | Computes the performance of a k-nearest neighbor classifier by n-fold cross-validation. The global misclassification rate, the confusion matrix and its standard deviation are computed. At each fold, a disjoint fraction of the data (1/cvsets) is predicted while the remaining fraction (1-1/cvsets) is used as prototypes. The confusion matrix is computed for each fold and summed up to form the global confusion matrix, gen. | |
| weight_eval | Interprets the weight matrices of a trained feed-forward neural network. Returns the importance (saliency) index for each input of the neural network. Based on an idea by Garson, G. D. (1991), extended to multi-output networks. | |
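To make the k-nearest neighbor and cross-validation descriptions concrete, here is a minimal Python sketch of the same ideas. It is not the tarcafun code and does not reproduce its MATLAB interface: the names `knn_predict`, `confusion_matrix` and `knn_crossval` are hypothetical, Euclidean distance is an assumption, the folds are formed by a simple index-modulo split, and the standard deviation of the confusion matrix is left out.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Assign x the most frequent class among its k nearest training points."""
    # Rank training points by Euclidean distance to x (assumed metric).
    nearest = sorted(range(len(train_X)),
                     key=lambda i: math.dist(train_X[i], x))[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]


def confusion_matrix(true_y, pred_y, classes):
    """Rows index the true class, columns the predicted class."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(true_y, pred_y):
        matrix[index[t]][index[p]] += 1
    return matrix


def knn_crossval(X, y, k=3, cvsets=5):
    """n-fold cross-validation: each fold is predicted from the remaining data."""
    classes = sorted(set(y))
    total = [[0] * len(classes) for _ in classes]
    for fold in range(cvsets):
        # Index modulo cvsets yields disjoint held-out sets (a simple,
        # hypothetical splitting rule chosen for this sketch).
        test_idx = [i for i in range(len(X)) if i % cvsets == fold]
        train_idx = [i for i in range(len(X)) if i % cvsets != fold]
        train_X = [X[i] for i in train_idx]
        train_y = [y[i] for i in train_idx]
        preds = [knn_predict(train_X, train_y, X[i], k) for i in test_idx]
        fold_cm = confusion_matrix([y[i] for i in test_idx], preds, classes)
        # Sum the per-fold confusion matrices into the global one.
        total = [[a + b for a, b in zip(r1, r2)]
                 for r1, r2 in zip(total, fold_cm)]
    n = len(classes)
    errors = sum(total[i][j] for i in range(n) for j in range(n) if i != j)
    return errors / len(X), total


# Toy data: two well-separated clusters, five points each.
X = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (0.3, 0.3), (0.1, 0.1),
     (5.0, 5.0), (5.1, 5.2), (5.2, 5.1), (5.3, 5.3), (5.1, 5.1)]
y = ["a"] * 5 + ["b"] * 5

error_rate, global_cm = knn_crossval(X, y, k=3, cvsets=5)
print(error_rate)  # 0.0
print(global_cm)   # [[5, 0], [0, 5]]
```

With `cvsets=5`, each fold holds out 1/5 of the points and uses the remaining 4/5 as prototypes, which mirrors the split described for lau_knn_crosserr.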